ComfyUI and Real-Time Video AI Processing

The intersection of artificial intelligence and live video is a largely untapped opportunity for developers and technical teams. As video streaming becomes increasingly central to modern applications, the need for sophisticated AI processing pipelines grows. ComfyUI, one of the most popular open-source tools for AI image and video generation, has become a solid foundation for building complex workflows. However, it does not offer robust real-time video support out of the box.

This is where ComfyStream comes in: an open-source project that runs alongside ComfyUI and uses it as a backend inference engine to apply workflows to real-time video. By opening up live video as a new content type for ComfyUI, ComfyStream unlocks new possibilities. Paired with Livepeer's infrastructure to power these real-time video AI workflows, it lets developers build sophisticated applications that were previously impractical.

Understanding ComfyUI's Architecture

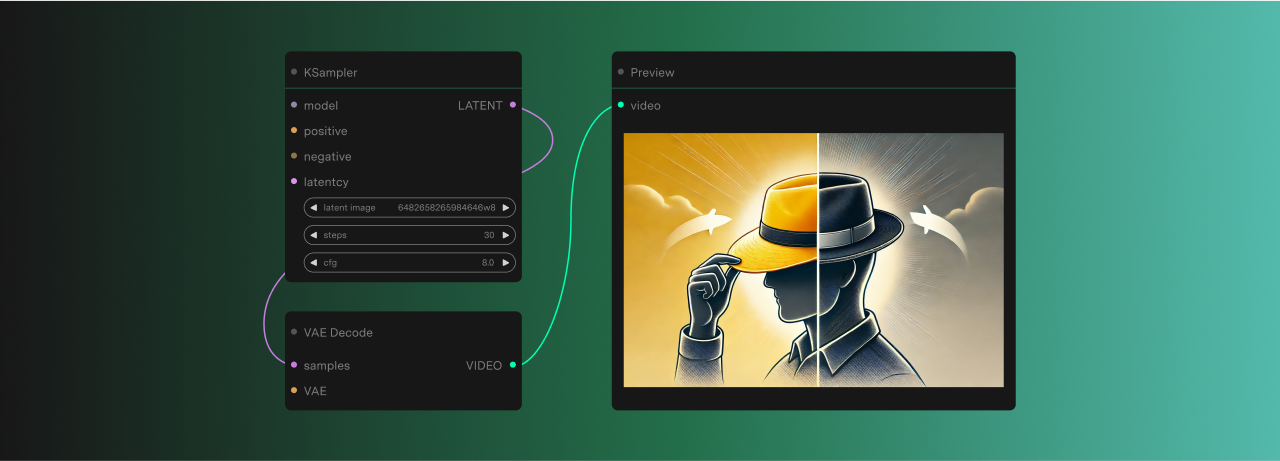

The Foundation: Node-Based Processing

ComfyUI's architecture is built on a directed acyclic graph (DAG), where each node represents a discrete processing unit and each edge carries one node's output into another node's input. This choice has significant implications for video processing workflows. Unlike traditional programming approaches, which require developers to write and maintain complex code for each processing step, ComfyUI's node system represents the entire processing workflow visually.

Each node in the system maintains clear boundaries between its inputs, processing logic, and outputs. This separation of concerns makes it easier to understand, modify, and optimize individual components of a video processing pipeline. For instance, a node handling object detection can be swapped out or modified without affecting the nodes handling video input or output encoding, as long as its replacement accepts and produces the same kinds of data.
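To make the node model concrete, here is a minimal sketch of executing a DAG workflow in Python. The dictionary shape loosely follows ComfyUI's API-format workflow JSON, where node IDs map to a `class_type` and `inputs`, and a link to another node's output is written as `[node_id, output_index]`. The node types (`LoadFrame`, `Invert`, `Threshold`, `Output`), the `run_workflow` executor, and the toy 2x2 "frame" are hypothetical illustrations, not ComfyUI's actual execution engine.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable

# Hypothetical node implementations: each takes keyword inputs and returns a tuple of outputs.
NODE_TYPES: dict[str, Callable[..., tuple]] = {
    # Stand-in for a decoder; ignores `path` and returns a fixed 2x2 grayscale frame.
    "LoadFrame": lambda path: ([[0, 64], [128, 255]],),
    "Invert": lambda frame: ([[255 - px for px in row] for row in frame],),
    "Threshold": lambda frame, level: ([[255 if px >= level else 0 for px in row] for row in frame],),
    "Output": lambda frame: (frame,),
}


def is_link(value: Any) -> bool:
    """A link is [source_node_id, output_index], mirroring ComfyUI's API format."""
    return (
        isinstance(value, list)
        and len(value) == 2
        and isinstance(value[0], str)
        and isinstance(value[1], int)
    )


def run_workflow(workflow: dict[str, dict]) -> dict[str, tuple]:
    """Run every node after its upstream dependencies; return each node's outputs."""
    # Map each node to the set of upstream nodes its inputs link to.
    graph = {
        node_id: {v[0] for v in spec["inputs"].values() if is_link(v)}
        for node_id, spec in workflow.items()
    }
    results: dict[str, tuple] = {}
    # static_order() yields predecessors first and raises CycleError if the graph has a cycle.
    for node_id in TopologicalSorter(graph).static_order():
        spec = workflow[node_id]
        # Resolve links to upstream outputs; pass literal values through unchanged.
        kwargs = {
            name: results[v[0]][v[1]] if is_link(v) else v
            for name, v in spec["inputs"].items()
        }
        results[node_id] = NODE_TYPES[spec["class_type"]](**kwargs)
    return results


workflow = {
    "1": {"class_type": "LoadFrame", "inputs": {"path": "frame_0001.png"}},
    "2": {"class_type": "Invert", "inputs": {"frame": ["1", 0]}},
    "3": {"class_type": "Output", "inputs": {"frame": ["2", 0]}},
}
print(run_workflow(workflow)["3"][0])  # [[255, 191], [127, 0]]

# Swap only the processing node; nodes "1" and "3" are unchanged.
swapped = {**workflow, "2": {"class_type": "Threshold", "inputs": {"frame": ["1", 0], "level": 100}}}
print(run_workflow(swapped)["3"][0])  # [[0, 0], [255, 255]]
```

Because the other nodes only refer to node "2" through links, replacing `Invert` with `Threshold` leaves the input and output nodes untouched, which is the separation of concerns described above.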

The Power of Visual Workflows

The visual nature of ComfyUI's interface goes beyond mere convenience. It provides several key advantages for video processing:

1. Intuitive Workflow Design

- Traditional video processing often requires developers to map out complex processing chains mentally

- ComfyUI makes these relationships explicit and visible

- Helps teams better understand and communicate workflow architecture

- Helps set expectations for results

2. Real-Time Feedback

- Provides immediate visual feedback at each processing stage

- Particularly valuable when working with video

- Allows developers to identify issues before committing to expensive video workflow changes

- Helps spot processing bottlenecks or quality degradation

3. Rapid Iteration

- In traditional pipelines, each modification requires code changes, recompilation, and redeployment

- ComfyUI's visual interface allows for rapid experimentation

- Makes it easier to optimize for specific use cases

- Enables quick testing of different processing configurations

Video Processing: A Natural Fit

ComfyUI's architecture is particularly well-suited for video processing for several fundamental reasons:

Sequential Processing Alignment

Video processing is inherently sequential, with frames flowing through various processing stages. ComfyUI's node-based architecture naturally maps to this flow:

1. Frame Ingestion

- Input nodes handle the complexity of ingesting video streams

- Adapt to various input sources

- Handle both live streams and pre-recorded content

- Manage stream synchronization

2. Processing Chain

- Intermediate nodes perform specific processing tasks

- Handle object detection, style transfer, or frame analysis

- Visual connections between nodes show how frames flow from one manipulation to the next

- Clear processing pathway visualization

3. Output Management

- Output nodes manage encoding tasks

- Handle streaming output

- Control storage processes

- Provide optimization options
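The three-stage flow above can be sketched with Python generators, so each frame moves through ingestion, the processing chain, and output one at a time. `Frame`, `ingest`, `process`, `output`, and the two toy stages are hypothetical names chosen for illustration; a production system would also have to handle decoding, GPU inference, and network transport, which this sketch leaves out. The per-stage timing dictionary hints at how bottlenecks can be located.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Frame:
    index: int
    pixels: list[int]  # toy grayscale pixel values, 0-255
    timestamp: float   # presentation time in seconds


Stage = Callable[[Frame], Frame]


def ingest(source: Iterable[list[int]], fps: float) -> Iterator[Frame]:
    """Frame ingestion: tag raw frames with an index and a presentation timestamp."""
    for i, pixels in enumerate(source):
        yield Frame(index=i, pixels=pixels, timestamp=i / fps)


def process(frames: Iterator[Frame], stages: list[Stage], timings: dict[str, float]) -> Iterator[Frame]:
    """Processing chain: apply each stage in order, accumulating wall-clock time per stage."""
    for frame in frames:
        for stage in stages:
            start = time.perf_counter()
            frame = stage(frame)
            elapsed = time.perf_counter() - start
            timings[stage.__name__] = timings.get(stage.__name__, 0.0) + elapsed
        yield frame


def output(frames: Iterator[Frame]) -> list[Frame]:
    """Output management: a real sink would encode and stream; this one just collects."""
    return list(frames)


def brighten(frame: Frame) -> Frame:
    return Frame(frame.index, [min(255, p + 50) for p in frame.pixels], frame.timestamp)


def invert(frame: Frame) -> Frame:
    return Frame(frame.index, [255 - p for p in frame.pixels], frame.timestamp)


timings: dict[str, float] = {}
raw_frames = [[0, 100, 250], [10, 20, 30]]
result = output(process(ingest(raw_frames, fps=30.0), [brighten, invert], timings))
for f in result:
    print(f.index, f.pixels)  # "0 [205, 105, 0]", then "1 [195, 185, 175]"
print(sorted(timings))  # ['brighten', 'invert']
```

Because generators are lazy, no frame is read from the source until the output stage asks for it, which mirrors how a live stream is consumed frame by frame rather than loaded all at once.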

Integration with Livepeer Infrastructure

Livepeer's integration with ComfyUI creates a robust foundation for our Pipelines product. The Livepeer network already provides cost-effective GPU resources at scale, making it well suited to AI inference workloads. Combined with Livepeer's proven infrastructure for reliable, low-latency video streaming, the integration forms a comprehensive platform for real-time video AI processing. Together, they address the core challenges of processing video with AI at scale, giving creators and developers the tools and infrastructure they need for production deployments.

Infrastructure Capabilities

1. Development Support

- Extensive library of pipeline templates

- Documented best practices

- Integration with output workflows

- Community-driven knowledge base

- Development environment that mirrors production

- Comprehensive API documentation

2. Distributed Processing

- Fully managed infrastructure

- Automatic scaling for workload demands

- Global distribution network

- High availability with SLAs

- Built-in security features

- Cost optimization

- Automatic updates and maintenance

3. Intelligent Deployment

- Local processing capabilities

- Reduced latency

- Optimized resource usage

Real-World Applications and Implementations

ComfyStream with Livepeer infrastructure enables a new generation of real-time video AI applications. Let's explore the key use cases that showcase the immediate potential of this technology:

High-Quality AI Video Filters for Live Streaming

Real-time AI filters represent a significant advancement over traditional video filters:

- Dynamic style transfer and artistic transformations

- Advanced visual effects powered by AI models

- Quality preservation at high frame rates

- Minimal latency for live applications
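Quality at high frame rates and minimal latency pull against each other, and a little arithmetic shows why. At a target rate of f frames per second, a pipeline has on average 1000/f ms per frame to keep up. The sketch below assumes a linear pipeline in which each stage runs on its own worker and handles a different frame at the same time: sustained throughput is then limited by the slowest stage, while one frame's end-to-end latency is roughly the sum of all stage times. `analyze_pipeline` is a hypothetical helper, and the stage timings are made-up illustrative values, not measurements of ComfyStream or Livepeer.

```python
def frame_budget_ms(fps: float) -> float:
    """Average time available per frame to sustain `fps`."""
    return 1000.0 / fps


def analyze_pipeline(stage_ms: dict[str, float], fps: float) -> dict[str, object]:
    """Assumes one worker per stage, with stages working on different frames concurrently."""
    budget = frame_budget_ms(fps)
    bottleneck = max(stage_ms, key=lambda name: stage_ms[name])
    return {
        "budget_ms": budget,
        # Latency: one frame's trip through every stage, ignoring queuing delays.
        "latency_ms": sum(stage_ms.values()),
        # Throughput: the slowest stage caps how many frames finish per second.
        "max_fps": 1000.0 / stage_ms[bottleneck],
        "bottleneck": bottleneck,
        "keeps_up": stage_ms[bottleneck] <= budget,
    }


# Illustrative per-frame stage times in milliseconds (not measurements).
stages = {"decode": 4.0, "ai_filter": 25.0, "encode": 6.0}
report = analyze_pipeline(stages, fps=30)
print(f"budget {report['budget_ms']:.1f} ms, latency {report['latency_ms']:.1f} ms, "
      f"max {report['max_fps']:.0f} fps, bottleneck {report['bottleneck']}")
# budget 33.3 ms, latency 35.0 ms, max 40 fps, bottleneck ai_filter
print(analyze_pipeline(stages, fps=60)["keeps_up"])  # False
```

In this example the pipeline sustains 30 fps even though a single frame takes 35 ms end to end, because the stages overlap; at 60 fps the 25 ms filter stage exceeds the roughly 16.7 ms budget, so that stage would need to get faster or be parallelized.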

AI-Enhanced Live Virtual Performances

Transform live performances with real-time AI processing:

- Real-time performer transformation and augmentation

- Dynamic virtual environments and backgrounds

- Interactive visual effects responding to performer movements

- Seamless integration with existing streaming platforms

AI-Enhanced Interactive Game Shows

Create engaging live interactive experiences:

- Dynamic content generation based on game state

- Interactive visual effects triggered by viewer participation

- Automated scene composition and transitions

Conversational Avatars

Enable sophisticated avatar-based communication:

- Real-time facial expression mapping

- Dynamic avatar animation

- Emotion-aware visual responses

- High-quality video output for streaming and video calls

Real-Time Video AI Agents

Implement intelligent video agents that can:

- Process and respond to live video input

- Generate contextual visual content

- Maintain a consistent agent personality

- Interact naturally with users in real time

Real-Time Virtual Try-On for Fashion

Enable interactive fashion experiences:

- Live garment visualization

- Real-time fit adjustment

- Dynamic style and color variations

- Multi-angle view processing

Additional Applications

While our immediate focus is on the use cases above, the platform can also support other sophisticated applications, which we plan to develop in future releases:

Sports Analytics

- Multi-camera processing

- Player tracking and analysis

- Game state understanding

- Broadcast enhancement

Content Analysis at Scale

- Automated content understanding

- Quality assurance

- Metadata generation

- Large-scale processing capabilities

Summary

Together, ComfyUI and ComfyStream combine live video with recent breakthroughs in generative AI, enabling new consumer experiences and giving open-source developers a playground for experimentation. Integration with Livepeer creates a powerful platform for building and deploying sophisticated video processing pipelines. As the field continues to evolve, this combination of visual programming and scalable infrastructure is likely to become increasingly valuable for developers and organizations working with video AI.