A Real-time Update to the Livepeer Network Vision

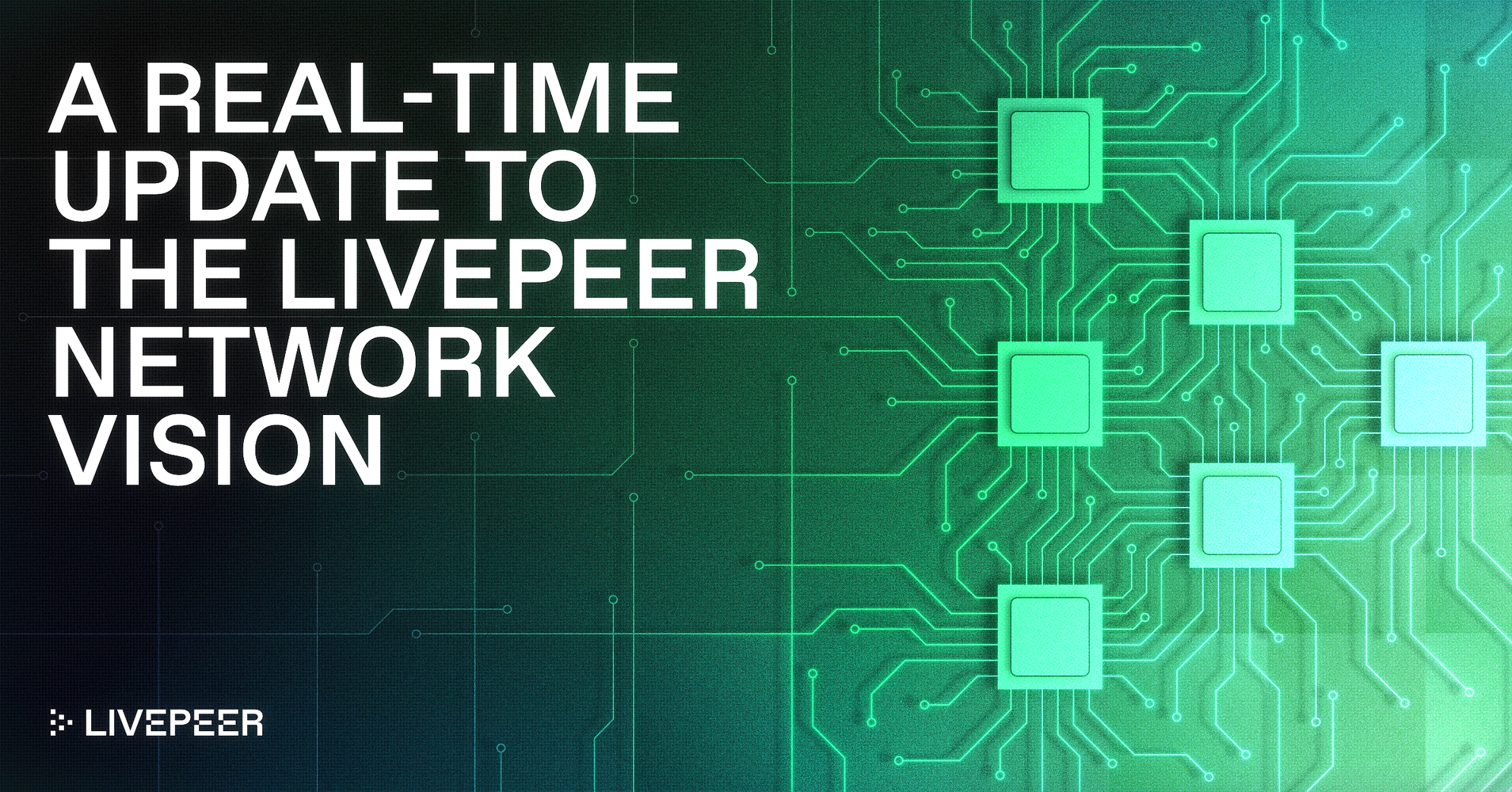

For the past year, the Livepeer Ecosystem has been guided by the Cascade vision: a path to transition from a pure streaming and transcoding infrastructure to one that can succeed at providing compute for the future of real-time AI video. The latest Livepeer quarterly report from Messari highlights that this transition is paying off, with network fees up 3x from this time last year and over 72% of fees now driven by AI inference. This is exemplified by the growing number of inspiring examples emerging from Daydream-powered real-time AI, and by real-time Agent avatar generation through Embody and the Agent SPE.

This shift has been an ecosystem-wide effort, ranging from branding and communications, to productization and go-to-market, to hardware upgrades for orchestrators. It has successfully moved the project under an updated mission and direction. However, it has still left ambiguity about what the Livepeer network itself offers as killer value propositions to new builders outside of the existing ecosystem. Is it a GPU cloud? A transcoding infra? An API engine? Now that there are signs of validation and accelerated momentum around an exciting opportunity, it’s time to home in on a refined vision for the future of the Livepeer network as a product itself.

The market for video is set to massively expand

The concept of live video itself is expanding well beyond a simple single stream of video captured from a camera. Now entire worlds and scenes are generated or enhanced in real-time via AI assistance, leading to more immersive and interactive experiences than possible via old-school streaming alone. For a taste of the future, see the following examples:

- The future of gaming will be AI generated video and worlds in real-time:

AI games are going to be amazing

— Matt Shumer (@mattshumer_) October 23, 2025

(sound on) pic.twitter.com/66aOdWJr4Y

- Video streams can be analyzed and data leveraged programmatically in real-time, for instant insight generation and decision making:

3 years since I joined roboflow

- 68k stars on github
- 60 videos and streams on youtube
- 2.5M views in total
- 40 technical blogposts

↓ coolest stuff I made pic.twitter.com/EMy5qWq1Vp

— SkalskiP (@skalskip92) October 29, 2025

- Real-time style transfer can enable avatars and agents to participate in the global economy:

🇨🇳 We’re screwed … it becomes indistinguishable from reality

— Lord Bebo (@MyLordBebo) October 30, 2025

Ali’s Wan 2.2 lets you stream without showing your face. It maps your voice and motion onto another face. pic.twitter.com/iD4nQIVaRY

Video world models and real-time AI video are merging, as both use AI to generate frame-by-frame video output on the fly and with low latency, based on user input and AI inference. This requires a tremendous amount of GPU compute and an exceptionally low-latency video streaming and compute stack: two areas in which the Livepeer network and community thrive, and two areas to which the many generic GPU inference providers in the market bring no unique skillset, experience, or software advantage.

The big opportunity for the Livepeer network is to be the leading AI Infrastructure For Real-Time Video.

From interactive live streaming to generative world models, Livepeer’s open-access, low-latency network of GPUs will be the best compute solution for cutting-edge AI video workflows.

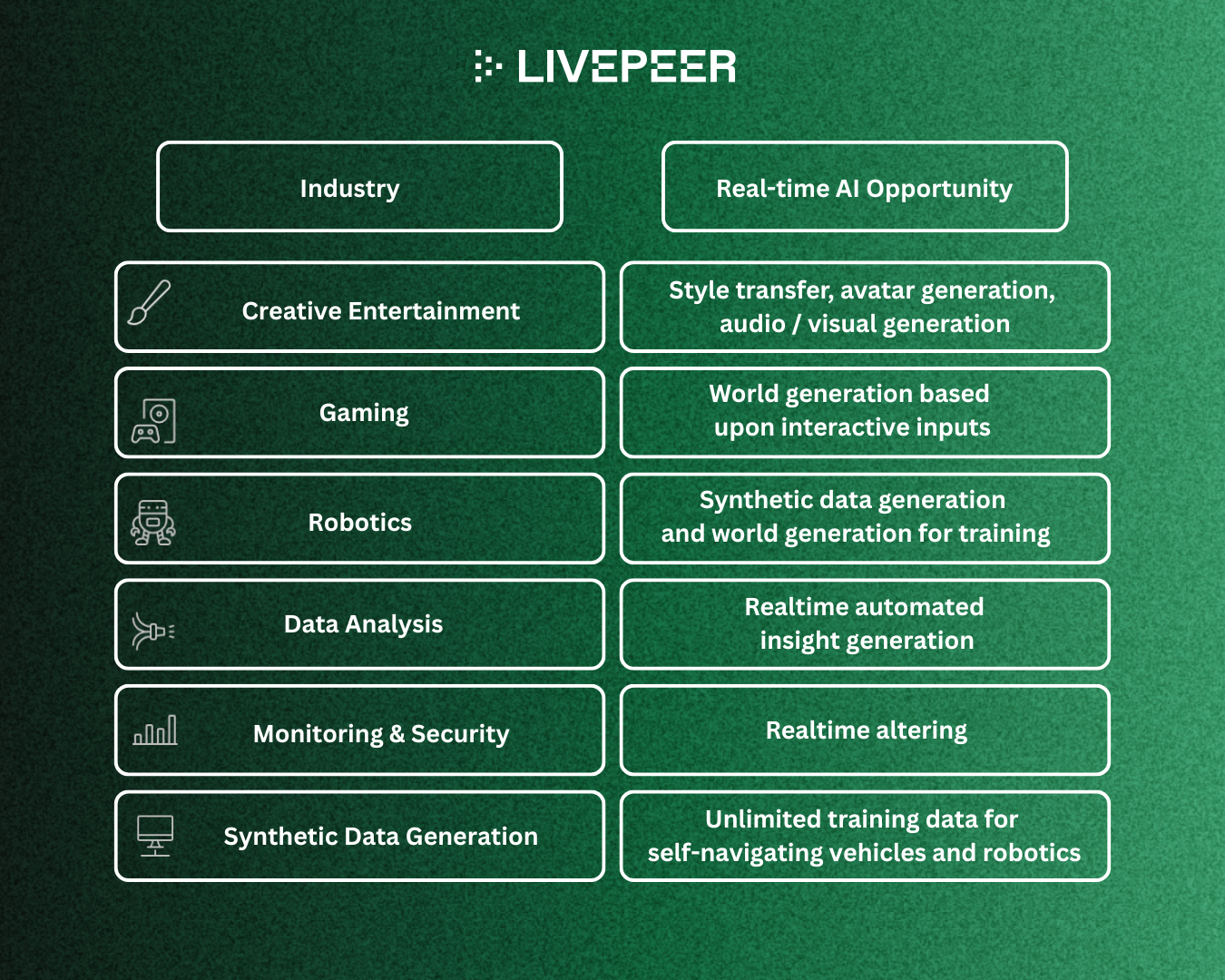

World models are a game-changing category, and Livepeer is well suited to offer a unique and differentiated product here that serves a huge market of diverse use cases. These range from creative entertainment, to gaming, to robotics, to data analysis, to monitoring and security, to synthetic data generation for AGI itself.

While it is an ambitious stretch, Nvidia executives responsible for the category have even projected that, due to the impact on robotics, the economic opportunity for world models could exceed $100 trillion, or approximately the size of the entire global economic output itself!

What does it mean to productize the Livepeer network to succeed as a valuable infrastructure in this category?

From a simplified viewpoint, it needs to deliver on the following:

1. Ability for users to deploy real-time AI workflows to the Livepeer network and request inference on them.

2. Industry leading latency for providing inference on real-time AI and world model workflows.

3. Cost effective scalability – users can pay as they go to scale up and down capacity and the network automagically delivers the scale required.

Imagine a gaming platform powering world-model-generated games with its own unique workflows, which generate game levels or areas in a certain style by combining several real-time models, LLMs, and style transfer mechanisms. Each game it powers has users exploring and creating their own corners of the interactive worlds, based on prompts and gameplay inputs. Every gamer who joins a game represents a new stream of AI video compute, and the Livepeer network is the backing infrastructure that provides the compute for this video world generation, leveraging hundreds or thousands of GPUs concurrently.

For this to be possible, the Livepeer network needs to enable that game platform to deploy its game generation workflow. It needs to offer low latency on the inference that runs this workflow, relative to the generic GPU compute clouds. The pricing needs to be competitive with alternative options in the market for this GPU compute. And the network needs to allow this company to scale up and down the number of GPUs that are live and ready to accept new real-time inference streams, based on the number of users currently active in the games it is powering.
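The last requirement, keeping enough GPUs live for the current number of players, can be illustrated with a small sizing rule. This is only a sketch under stated assumptions: the function name `target_warm_gpus`, the `streams_per_gpu` capacity, and the percentage headroom are all hypothetical and are not part of the Livepeer network or any of its software.

```python
import math


def target_warm_gpus(active_streams: int, streams_per_gpu: int = 1,
                     headroom_pct: int = 20, min_warm: int = 1) -> int:
    """Hypothetical rule: GPUs to keep live for the current stream count."""
    if active_streams < 0 or streams_per_gpu < 1 or headroom_pct < 0:
        raise ValueError("inputs must be non-negative, streams_per_gpu >= 1")
    # GPUs needed to serve the streams that are live right now.
    needed = math.ceil(active_streams / streams_per_gpu)
    # Spare GPUs so that newly joining players do not wait for a cold start.
    spare = math.ceil(needed * headroom_pct / 100)
    # Never drop below a small floor, even when nobody is playing.
    return max(min_warm, needed + spare)


if __name__ == "__main__":
    for players in (0, 5, 100, 1000):
        print(players, "players ->", target_warm_gpus(players), "GPUs")
```

As players join or leave, the platform would re-evaluate this target and request or release capacity accordingly. A production policy would likely also smooth over short spikes, which this sketch does not attempt.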

All of this is possible on the Livepeer network, and it isn’t far away from where we are now. If we work to build, test, and iterate on the Livepeer network itself towards supporting the latency and scale required for these types of workflows, we’ll be set up to power them.

Now multiply this example gaming company by the large number of diverse industries and verticals that real-time AI and world models will touch. Each category can have one or multiple companies competing to leverage this scalable and cost-effective infrastructure, each with a unique go-to-market targeting different segments. And they can all be powered by the Livepeer network’s unique value propositions.

Livepeer’s core network is strategically positioned

What are these value propositions that make the Livepeer network differentiated relative to alternative options in the market? I’d argue that there are three primary, table stakes, must-have value propositions if Livepeer is to succeed.

1. Industry standard low latency infrastructure specializing in real-time AI and world model workflows: First, the network needs to let its users deploy custom workflows. Inference alone on base models is not enough and does not represent scaled demand. Users want to take base models, chain them together with other models and pre/post processors, and create unique and specialized capabilities. When one of these capabilities is defined as a workflow, that workflow is the unit that needs to be deployed as a job on the Livepeer network, and the network needs to be able to run inference on it. Second, for these real-time interactive use cases, latency matters a lot. Generic GPU clouds don’t offer the specialized low-latency video stacks needed to ingest, process, and serve video with optimal latency, but Livepeer does. Livepeer therefore needs to benchmark itself against alternative GPU clouds and show equal or lower latency for these particular real-time and world model use cases.

2. Cost effective scalability: GPU provisioning, reservations, and competition for scarce supply create major challenges for AI companies, which often overpay for GPUs that sit idle most of the time in order to guarantee the capacity they need. The Livepeer network’s value proposition is that users should be able to “automagically” scale up almost instantly and pay on demand for the compute they use, rather than having to pre-pay for reservations and let capacity sit idle. This is enabled by Livepeer taking advantage of otherwise idle long-tail compute through its open marketplace and its supply-side incentives. The Livepeer network needs to be more cost effective than alternative GPU clouds within this category, with impacts comparable to the 10x+ cost reduction the network has already demonstrated in live video transcoding.

3. Community driven, open source, open access: The Livepeer project and software stack are open source. Users can control, update, and contribute to the software they are using. They can also be owners in the infrastructure itself through the Livepeer Token, and can benefit from the network’s improvements and adoption, creating a network effect. A community that cares about the network’s success and pushes it forward collectively can be a superpower, relative to the uncertain and shaky relationship between builders and centralized platform providers, in which builders have a history of getting rugged by limitations to access, changes in functionality, or discontinuation of the platforms. Anyone can build on the Livepeer network regardless of location, jurisdiction, use case, or central party control.
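The idle-capacity argument in the second value proposition can be made concrete with a little arithmetic. The sketch below compares paying for a fixed reservation with paying only for GPU-hours actually used. Every number in it is a made-up placeholder chosen for illustration, not real Livepeer or cloud pricing, and the function names are hypothetical.

```python
def reserved_cost(reserved_gpus: int, hours: float, price_per_gpu_hour: float) -> float:
    """Cost of a reservation: every reserved GPU is paid for, busy or idle."""
    return reserved_gpus * hours * price_per_gpu_hour


def on_demand_cost(gpu_hours_used: float, price_per_gpu_hour: float) -> float:
    """Cost of pay-as-you-go: only the GPU-hours actually used are billed."""
    return gpu_hours_used * price_per_gpu_hour


def break_even_utilization(reserved_price: float, on_demand_price: float) -> float:
    """Utilization above which the reservation becomes the cheaper option."""
    return reserved_price / on_demand_price


if __name__ == "__main__":
    # Placeholder scenario: 100 GPUs reserved for a day, used 25% of the time.
    reserved = reserved_cost(reserved_gpus=100, hours=24, price_per_gpu_hour=2.0)
    used = on_demand_cost(gpu_hours_used=600, price_per_gpu_hour=3.0)
    print(f"reserved:  ${reserved:,.0f}")
    print(f"on demand: ${used:,.0f}")
    print(f"break-even utilization: {break_even_utilization(2.0, 3.0):.0%}")
```

Under these placeholder prices, on-demand compute stays cheaper until the reserved GPUs are busy about two thirds of the time, even though its hourly rate is higher. Bursty real-time workloads that sit well below that utilization are the case the value proposition targets.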

The above are primary value propositions that should appeal to nearly all users. We must work to close the gaps and live up to those value props before we can hope to successfully go to market and attract new vertical-specific companies to build directly on top of the network. Luckily, in addition to all of Livepeer’s streaming users, we have a great real-time AI design partner in Daydream, which is already going to market around creative real-time AI, using the network, and contributing to its development to live up to these requirements. While building with this design partner, the ecosystem should also be working to productize the network so that it lives up to these promises in a more general way: setting up benchmarks, building testing frameworks, and building mechanisms for scaling up supply ahead of demand, so that it can demonstrate this power to the world alongside successful Daydream case studies.

Opportunities to push towards this vision

To truly live up to these value propositions, there are a number of opportunities for the community to focus on in order to close some key gaps. There are many details to come in more technical posts laying out roadmaps and execution frameworks, but at a high level, consider a series of milestones that take the network as a product from technically functional, to production usable, to extensible, to infinitely scalable:

1. Network MVP - Measure what matters: Establish key network performance SLAs, measure latency and performance benchmarks, and enhance the low-latency client to support real-time AI workflows above industry-grade standards.

2. Network as a Product - Self-adaptability and scalability: The network delivers against these SLAs and core value props for supported real-time AI workflows. Selection algorithms, failover and redundancy, and competitive market price discovery are established for real-time AI.

3. Extensibility - Toolkit for the community to deploy workflows and provision resources: Workflow deployment and signaling, plus LPT incentive updates to ensure that compute supply for popular AI workflows exceeds demand.

4. Parallel Scalability: Manage clusters of resources on the network for parallel workflow execution, truly unlocking job types beyond single-GPU inference.
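As an illustration of what "measure what matters" could look like in the first milestone, the sketch below checks a set of latency samples against a percentile budget. It is a generic nearest-rank percentile check, not an existing Livepeer benchmark. The sample values, the 150 ms budget, and the function names are hypothetical.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile of the samples, for an integer p in 1..100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 1 <= p <= 100:
        raise ValueError("p must be between 1 and 100")
    ordered = sorted(samples)
    # Nearest-rank: the smallest value with at least p% of samples at or below it.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def meets_sla(samples_ms: list[float], p: int, budget_ms: float) -> bool:
    """True if the p-th percentile latency is within the budget."""
    return percentile(samples_ms, p) <= budget_ms


if __name__ == "__main__":
    # Hypothetical per-frame round-trip latencies in milliseconds.
    latencies = [80, 90, 100, 110, 400]
    print("p50 within 150 ms:", meets_sla(latencies, 50, 150))
    print("p95 within 150 ms:", meets_sla(latencies, 95, 150))
```

Tail percentiles matter more than averages for interactive video: in this made-up sample the median looks healthy, while the one slow frame dominates the p95 and would be what a user notices.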

Many teams within the ecosystem, from the Foundation, to Livepeer Inc, to various SPEs, have already started operationalizing how they’ll contribute to milestones 1 and 2, upgrading the network to deliver against these key real-time AI value propositions.

Conclusion and Livepeer’s opportunity

The opportunity to be the GPU infrastructure that powers real-time AI and world models is absolutely massive. The compute requirements are tremendous (1000x that of AI text or images), and real-time interaction with media represents a new platform that will affect all of the above-mentioned industries. The Livepeer network can be the infrastructure that powers it. How we plan to close the needed gaps and achieve this will be the subject of an upcoming post. But when we do prove these value propositions, Livepeer will have a clear path to 100x the demand on the network.

The likely target users for the network are startups building vertical-specific businesses on top of real-time AI and world model workflows. The ecosystem should look to enable one (or multiple!) startups in each category to build real-time AI platforms that serve gaming, robotics, synthetic data generation, monitoring and analysis, and all the additional relevant categories. The network’s value propositions will hopefully speak for themselves, but in the early stages of this journey, the ecosystem will likely want to use incentives (like investment or credits) to bootstrap these businesses into existence. Each will represent a chance at success, and will bring more demand and proof.

Ultimately, many users of these platforms may choose to build directly on the network themselves. Startups often begin on platforms like Heroku, Netlify, or Vercel, then build directly on AWS as they scale and need more control and cost savings, and ultimately move to their own datacenters after reaching even more scale. Similarly, users of Daydream or of a real-time Agent platform built on Livepeer may ultimately choose to run their own gateways to realize the cost savings, control, and full feature set that come from doing so. This is a good thing, as it represents even more usage and scale for the network, and more proof that the Livepeer network has product-market fit as an infrastructure and can absorb all workflows directly. The businesses built on top will provide their own vertical-specific bundles of features and services that onboard that vertical-specific capacity, but they’ll be complemented and enabled by the Livepeer Network’s superpowers.

While there’s a lot of work ahead, the Livepeer community has already stepped up to cover tremendous ground on this mission. With the network already powering millions of minutes of real-time AI inference per week, our orchestrators already upgrading their capacity and procurement mechanisms to provide real-time AI-capable compute, and the Foundation groups already working to evaluate the network’s incentives and cryptoeconomics to sustainably fund and reward those contributing to this effort, we’re set up well to capture this enormous opportunity!